Multimaster Replica

Architecture

INTRODUCTION

Connecting servers in cascade (not necessarily in a star topology, since the same server may act as both client and server and need not sit at the end of the chain) requires a specific configuration.

This document explains what can be done with this configuration, what cannot be done, and which features are planned but not yet available.

REQUIREMENTS

| REQUIREMENTS |
|---|
| The server version must be equal to or greater than 3.10.269.842. |
| Only the replica server needs to be installed on each server machine; the client is built into the service itself. |
| The hardware requirements are the same as for a regular server. |
| Clients cannot move between servers: a replica client is registered on one server of the chain and always replicates with that server. |
| A client with a given license can replicate only with the server on which it was registered. |

The tables and fields have the following requirements:

- The central node must have the tables and fields required for batch replication, version 2 or higher.

- The nodes that operate as clients must support batch replication, version 2 or higher, and in addition:

  - The master_replica_iqueue table must contain the client fields STATUS, IDSERVER, DMIDPR and PMAP.

  - The master_replica_queue table must contain the CLNTDISABLED field (integer).

To avoid problems, the best approach is to add the client fields to the iqueue of every database and to create the CLNTDISABLED field in the queue of every database. This makes it possible to add servers to the chain at any time.
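A minimal pre-flight sketch of the field requirements above, assuming you can obtain each database's column names (for example from the engine's catalog) as a mapping of table name to column names. It checks only the client fields listed here, not the batch replication version 2 tables, and it compares column names case-insensitively, which is an assumption about the database engine.

```python
# Client fields required on every database, as listed in the requirements.
REQUIRED_COLUMNS = {
    "master_replica_iqueue": {"STATUS", "IDSERVER", "DMIDPR", "PMAP"},
    "master_replica_queue": {"CLNTDISABLED"},
}


def missing_columns(schema):
    """Return {table: sorted missing columns} for tables that fall short.

    `schema` maps table names to the column names they contain. A table
    absent from `schema` is reported with all of its required columns.
    """
    report = {}
    for table, required in REQUIRED_COLUMNS.items():
        present = {c.upper() for c in schema.get(table, set())}
        missing = sorted(required - present)
        if missing:
            report[table] = missing
    return report


if __name__ == "__main__":
    db = {
        "master_replica_iqueue": {"id", "status", "idserver"},
        "master_replica_queue": {"id"},
    }
    # prints {'master_replica_iqueue': ['DMIDPR', 'PMAP'], 'master_replica_queue': ['CLNTDISABLED']}
    print(missing_columns(db))
```

Running it against every database, not only the current client nodes, matches the advice above of preparing all databases so servers can be added later.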

The databases must be monitored in replicator.ini. This is normally already the case: the iqueue is mandatory, so the database has to be monitored for the server to operate at all.

CONFIGURATION

Install the server on each of the machines.

The same server license can be installed on all the machines of the chain.

Each server of the chain needs a different database license, because this is how the nodes of the chain identify themselves as clients to the levels above them.

On the node that will be the central one, a license must be registered for each node connected directly to it.

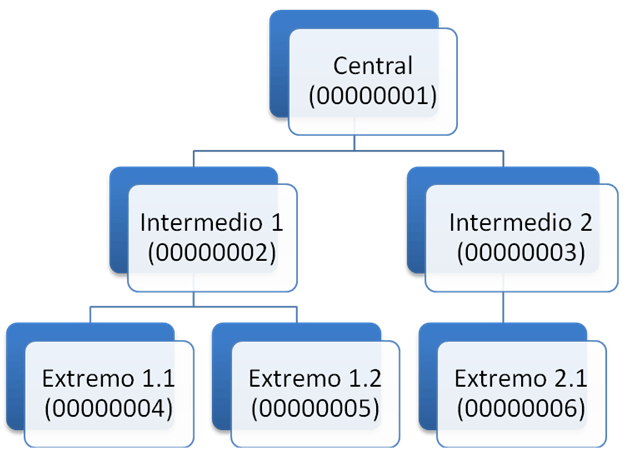

Figure 1 shows a possible hierarchy with example database licenses. The central node has license 00000001, licenses 2 and 3 are intermediate nodes, and licenses 4, 5 and 6 are extreme nodes.

Any node can also have ordinary replica clients connected to it, but these need no special configuration, so they are not covered here.

To connect a server node with its slave nodes, a license must be created for each slave. In our example, a license must be created on the central node for each intermediate node.

On intermediate node 1, a license must be created for each of its extreme nodes (4 and 5), and on intermediate node 2, a license must be created for extreme node 6.

These licenses are generated with xonetsetup or xonemanager, since for replication purposes they are regular clients.
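The licensing rule above (each node registers one link license per directly connected child) can be sketched over the Figure 1 hierarchy. The 8-digit numbers for licenses 2 to 6 are assumed to follow the 00000001 pattern, and the parent map is only an illustrative data structure, not something the product reads.

```python
# Figure 1 hierarchy: each database license mapped to its upper node's
# license (None for the central node). Numbers 2..6 assume the 00000001 pattern.
PARENT = {
    "00000001": None,        # central node
    "00000002": "00000001",  # intermediate node 1
    "00000003": "00000001",  # intermediate node 2
    "00000004": "00000002",  # extreme node 1.1
    "00000005": "00000002",  # extreme node 1.2
    "00000006": "00000003",  # extreme node 2.1
}


def links_to_register(parent):
    """Map each server node to the sorted licenses of its direct children.

    Each child needs one link license on its parent. Nodes with no
    children (the extreme nodes) do not appear as keys.
    """
    children = {}
    for node, upper in parent.items():
        if upper is not None:
            children.setdefault(upper, []).append(node)
    return {node: sorted(kids) for node, kids in children.items()}


if __name__ == "__main__":
    for server, clients in links_to_register(PARENT).items():
        print(server, "->", ", ".join(clients))
```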

To distinguish them from other clients, easily recognizable MID numbers can be used.

For example, MID 1000 for intermediate node 1 and MID 1001 for intermediate node 2. This is not mandatory, however; any MID number can be used to identify a slave node.

Figure 1

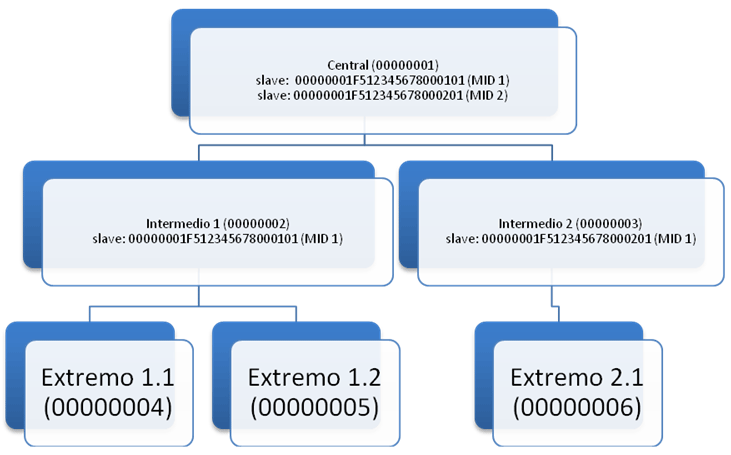

In our example, we create two clients on the central node, named 00000001F512345678000101 and 00000001F512345678000201, with MID 1 and 2 respectively.

Next, we link intermediate nodes 1 and 2, as shown in Figure 2.

Figure 2

As the figure shows, a row has been created in the slave table of the intermediate server with the same SERIAL that this node has on the central server.

Any number can be assigned as the MID of this row, because no client on this node will replicate with that MID.

Exactly the same happens on intermediate node 2 (license 00000003).

In both nodes, MID 1 has been used as the link MID.
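Judging only from the example serials in this section, a serial appears to consist of the 8-digit license of the server node, a 10-character block (F512345678 in every example), a 4-digit MID and a trailing 01. That layout is an inference from the examples, not a documented format, and both helpers below are hypothetical. The sketch shows why the link row's SERIAL carries the upper node's license rather than the local one.

```python
import re

# ASSUMED layout, inferred only from the example serials in this document:
# 8-digit license of the server node + 10-character block + 4-digit MID + "01".
SERIAL_RE = re.compile(r"(\d{8})([0-9A-Z]{10})(\d{4})01")


def parse_serial(serial):
    """Return (server_license, mid) from a serial with the assumed layout."""
    m = SERIAL_RE.fullmatch(serial)
    if m is None:
        raise ValueError(f"serial does not match the assumed layout: {serial!r}")
    return m.group(1), int(m.group(3))


def make_serial(server_license, mid, middle="F512345678"):
    """Hypothetical helper: build a serial with the assumed layout."""
    if not (len(server_license) == 8 and server_license.isdigit()):
        raise ValueError("license must be 8 digits")
    if not 0 <= mid <= 9999:
        raise ValueError("MID must fit in 4 digits")
    return f"{server_license}{middle}{mid:04d}01"


if __name__ == "__main__":
    # Link row on intermediate node 1: the license part is the central node's.
    print(parse_serial("00000001F512345678000101"))  # ('00000001', 1)
```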

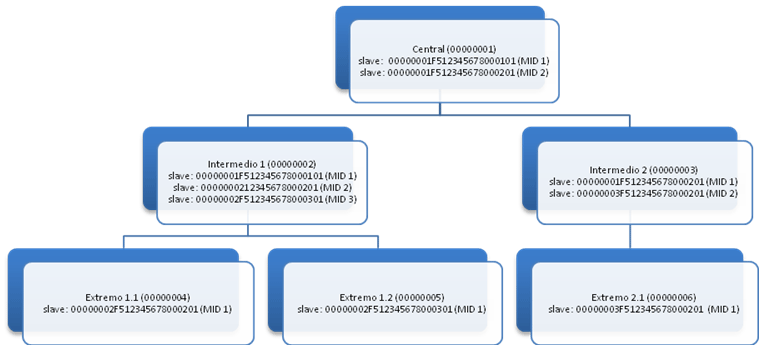

Now we create, on intermediate node 1, the two link clients for its extreme nodes and, on intermediate node 2, the link client for its only extreme node.

Figure 3 shows the result of this step.

Figure 3

At this point, all the nodes are linked at the license level.

The following remarks apply:

- The MIDs used on each node are independent of the MIDs used on the server node. The system designer may choose to keep the same MID on the client node and on the server to make operations easier to trace. In our example, the MIDs could be preserved along the hierarchy and propagated upwards, so that operations going from an extreme node to its intermediate node keep the same MID when they go up to the upper node.

- The row inserted on the client node to link it with the server has to be inserted by hand because, as can be seen, its SERIAL is numbered by the server, not by this node; therefore a client with this number cannot be registered automatically. xonetsetup or xonemanager may need to be modified to do this job, but for now it has to be done by hand.

Next, we have to configure the nodes acting as clients (the intermediate and extreme nodes) to connect to their upper nodes.

To do this, edit the rows inserted in the slave table with a link license number.

The required changes are:

- DIRIP field: the address of the upper node, in the form dir[:port].

- ACTION field: the word “link”.
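A small validation sketch for the two fields above, assuming a link row can be read as a dictionary keyed by field name. The port in the usage is illustrative only; the document does not state a default port, so a missing port is returned as None rather than guessed. IPv6 literals are not handled.

```python
def parse_dirip(value):
    """Split a DIRIP value of the form dir[:port] into (host, port).

    port is None when the value has no ":port" suffix.
    """
    value = value.strip()
    host, sep, port = value.rpartition(":")
    if not sep:
        host, port = value, None
    elif not port.isdigit() or not 0 < int(port) < 65536:
        raise ValueError(f"invalid port in DIRIP: {value!r}")
    else:
        port = int(port)
    if not host:
        raise ValueError(f"missing address in DIRIP: {value!r}")
    return host, port


def check_link_row(row):
    """Validate the two fields a link row needs; return the parsed address."""
    if row.get("ACTION") != "link":
        raise ValueError("ACTION must be 'link'")
    return parse_dirip(row.get("DIRIP", ""))


if __name__ == "__main__":
    print(check_link_row({"ACTION": "link", "DIRIP": "192.168.1.1"}))
    print(parse_dirip("192.168.2.1:3000"))
```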

The link data as it would be entered on each linked node is given below, based on the IP addresses listed in Table 1:

| NODE | IP ADDRESS |
|---|---|
| Central Node | 192.168.1.1 |
| Intermediate Node 1 | 192.168.2.1 |
| Intermediate Node 2 | 192.168.3.1 |
| Extreme Node 1.1 | 192.168.4.1 |
| Extreme Node 1.2 | 192.168.5.1 |
| Extreme Node 2.1 | 192.168.6.1 |

TABLE 1

Configuration values

| NODE | DIRIP (UPPER NODE ADDRESS) | ACTION | SERIAL |
|---|---|---|---|
| Intermediate Node 1 | 192.168.1.1 | link | 00000001F512345678000101 |
| Intermediate Node 2 | 192.168.1.1 | link | 00000001F512345678000201 |
| Extreme Node 1.1 | 192.168.2.1 | link | 00000002F512345678000201 |
| Extreme Node 1.2 | 192.168.2.1 | link | 00000002F512345678000301 |
| Extreme Node 2.1 | 192.168.3.1 | link | 00000003F512345678000201 |
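The rule behind the configuration table is that each client node's DIRIP is the Table 1 address of its upper node. A minimal sketch that derives the rows from the hierarchy; node names, addresses and serials are copied from the tables (the serials are not derived), and the dictionaries are only illustrative data structures.

```python
# Table 1: address of each node.
IP = {
    "Central Node": "192.168.1.1",
    "Intermediate Node 1": "192.168.2.1",
    "Intermediate Node 2": "192.168.3.1",
    "Extreme Node 1.1": "192.168.4.1",
    "Extreme Node 1.2": "192.168.5.1",
    "Extreme Node 2.1": "192.168.6.1",
}

# Upper node of every node that acts as a client.
UPPER = {
    "Intermediate Node 1": "Central Node",
    "Intermediate Node 2": "Central Node",
    "Extreme Node 1.1": "Intermediate Node 1",
    "Extreme Node 1.2": "Intermediate Node 1",
    "Extreme Node 2.1": "Intermediate Node 2",
}

# Link-row serial of each client node, copied from the configuration table.
SERIAL = {
    "Intermediate Node 1": "00000001F512345678000101",
    "Intermediate Node 2": "00000001F512345678000201",
    "Extreme Node 1.1": "00000002F512345678000201",
    "Extreme Node 1.2": "00000002F512345678000301",
    "Extreme Node 2.1": "00000003F512345678000201",
}


def link_rows(upper, ip, serial):
    """Build the DIRIP/ACTION/SERIAL values of each client node's link row."""
    return {
        node: {"DIRIP": ip[parent], "ACTION": "link", "SERIAL": serial[node]}
        for node, parent in upper.items()
    }


if __name__ == "__main__":
    for node, row in link_rows(UPPER, IP, SERIAL).items():
        print(node, row["DIRIP"], row["ACTION"], row["SERIAL"])
```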

The servers are now configured to replicate in cascade.

From now on, new replica clients can be registered on any server as usual, and operations will propagate among the servers, which end up holding equivalent data.

REPLICATOR.INI

The built-in replica client has parameters similar to those of standalone clients. They are configured in the replicator.ini file, in the same way as for the current mobile clients (license.ini).

These parameters go in the same section of the file in which the database settings are stored, so each database can keep its own linked-client configuration.

| KEY | DESCRIPTION |
|---|---|
| Interval | Interval unit (0: milliseconds, 1: seconds, 2: minutes, 3: hours). |
| Timeout | Amount of time, expressed in the unit set by Interval. |

The INI file can contain other values (the usual ones in the Win32 version), but they are not listed here. They can be requested if needed.
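A sketch of how the two keys combine, using Python's standard configparser. The section name MyDatabase is hypothetical (it stands for the section that already holds the database settings), and the sketch assumes the real replicator.ini is plain key=value INI syntax; interpolation is disabled so that any "%" characters in other values are not misread.

```python
import configparser
from datetime import timedelta

# Interval codes from the table above, mapped to timedelta keyword names.
INTERVAL_UNITS = {0: "milliseconds", 1: "seconds", 2: "minutes", 3: "hours"}

# Hypothetical fragment; "MyDatabase" stands for the database's own section.
SAMPLE_INI = """
[MyDatabase]
Interval=2
Timeout=5
"""


def link_client_timeout(ini_text, section):
    """Return the link client's Timeout as a timedelta, in its Interval unit."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(ini_text)
    interval = parser.getint(section, "Interval")
    timeout = parser.getint(section, "Timeout")
    if interval not in INTERVAL_UNITS:
        raise ValueError(f"unknown Interval code: {interval}")
    return timedelta(**{INTERVAL_UNITS[interval]: timeout})


if __name__ == "__main__":
    print(link_client_timeout(SAMPLE_INI, "MyDatabase"))  # prints 0:05:00
```

Keeping Interval and Timeout as separate integers, rather than one value with a suffix, is what makes the unit lookup necessary; converting to timedelta avoids floating-point rounding for millisecond values.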

FUNCTIONING

When a regular replica client (either a terminal or an integration interface) sends replica operations to a node that works as both server and client, the node receives the operations as usual.

Once these operations have been executed and are in the replica queue, the client module detects that it has operations to send and connects to the upper node to send them.

These operations reach the upper node with the MID that this node has in the upper node's client list, regardless of the original MID that generated them. Therefore, once operations go up a level, the reference to the client that generated them is lost.

When the node finishes uploading its operations, it starts downloading the available ones and inserts them into the iqueue, as a regular replica client would.
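The cycle described in the last three paragraphs can be sketched as a toy in-memory simulation. The classes, the per-MID `pending` download list and the MID values (1000 for the node on its upper server, following the earlier MID suggestion, and 1 for the local link row) are hypothetical; the sketch shows only the ordering (upload, then download) and how the original MID is replaced one level up.

```python
from dataclasses import dataclass, field


@dataclass
class Operation:
    payload: str
    mid: int  # MID under which the holding node has recorded the operation


@dataclass
class Node:
    name: str
    queue: list = field(default_factory=list)    # executed ops waiting to go up
    iqueue: list = field(default_factory=list)   # received ops waiting to be executed
    pending: dict = field(default_factory=dict)  # hypothetical: client MID -> payloads to download


def sync_with_upper(node, upper, mid_on_upper, local_link_mid):
    """One cycle of the built-in link client: upload first, then download."""
    # Upload: the upper node records every operation under this node's MID,
    # whatever MID originally generated it, so the origin is lost.
    for op in node.queue:
        upper.iqueue.append(Operation(op.payload, mid_on_upper))
    node.queue.clear()
    # Download: payloads pending for this node go into the local iqueue,
    # tagged with the MID of the local link row.
    for payload in upper.pending.pop(mid_on_upper, []):
        node.iqueue.append(Operation(payload, local_link_mid))


def demo():
    central = Node("central", pending={1000: ["price list"]})
    inter = Node("intermediate 1", queue=[Operation("order A", 7), Operation("order B", 9)])
    sync_with_upper(inter, central, mid_on_upper=1000, local_link_mid=1)
    return central, inter


if __name__ == "__main__":
    central, inter = demo()
    print([(op.payload, op.mid) for op in central.iqueue])  # [('order A', 1000), ('order B', 1000)]
    print([(op.payload, op.mid) for op in inter.iqueue])    # [('price list', 1)]
```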

The server's iqueue execution process identifies these operations as coming from the link client, because it knows the link MID, so it executes them following the necessary rules (first those with DMID status, then those with regular status, etc.). Otherwise, operations coming from clients and operations coming from upper servers are executed in the same way with respect to mapping resolution, conflicts, etc.
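The ordering rule can be sketched as a stable sort on a status priority. The status labels DMID and REGULAR are placeholders: the text names these two statuses ("etc." implies others) but not the literal STATUS values stored in master_replica_iqueue, and rows are modelled as plain dictionaries for illustration.

```python
# Placeholder labels: the text names a DMID status and a regular status,
# but not the literal STATUS values stored in master_replica_iqueue.
STATUS_PRIORITY = {"DMID": 0, "REGULAR": 1}

SAMPLE_OPS = [
    {"id": 1, "status": "REGULAR", "mid": 1},
    {"id": 2, "status": "DMID", "mid": 7},
    {"id": 3, "status": "REGULAR", "mid": 1},
    {"id": 4, "status": "DMID", "mid": 1},
]


def execution_order(ops):
    """DMID rows first, then regular rows; unknown statuses go last.

    sorted() is stable, so rows with the same status keep their arrival order.
    """
    last = len(STATUS_PRIORITY)
    return sorted(ops, key=lambda op: STATUS_PRIORITY.get(op["status"], last))


def from_link(ops, link_mid):
    """Rows the server can attribute to the link client via the link MID."""
    return [op for op in ops if op["mid"] == link_mid]


if __name__ == "__main__":
    print([op["id"] for op in execution_order(SAMPLE_OPS)])      # [2, 4, 1, 3]
    print([op["id"] for op in from_link(SAMPLE_OPS, link_mid=1)])  # [1, 3, 4]
```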

If we want the central node to act as a data collector that never propagates data to the lower nodes, it is enough to set the link clients as conditional and leave their selection empty. This way, no operation will ever pass to the squeue for these clients, and they will never have operations to download.
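A minimal routing sketch of the conditional-client rule, assuming a client's selection can be modelled as a set of table names (real selectivity rules may filter at row level) and leaving aside whether an operation is echoed back to the client that sent it. The client names are hypothetical.

```python
def squeue_targets(table, clients):
    """Return the clients whose squeue would receive an operation on `table`.

    `clients` maps a client name to {"conditional": bool, "selection": set}.
    Unconditional clients receive everything; conditional clients receive
    only tables in their selection, so an empty selection receives nothing.
    """
    return [
        name
        for name, cfg in clients.items()
        if not cfg["conditional"] or table in cfg["selection"]
    ]


# Hypothetical clients on a central node acting as a data collector.
CENTRAL_CLIENTS = {
    "link intermediate 1": {"conditional": True, "selection": set()},
    "link intermediate 2": {"conditional": True, "selection": set()},
    "pos terminal": {"conditional": False, "selection": set()},
}


if __name__ == "__main__":
    print(squeue_targets("orders", CENTRAL_CLIENTS))  # ['pos terminal']
```

Giving a conditional link client a non-empty selection instead would pass down only the selected data, which corresponds to the intermediate-node subsets described next.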

Intermediate nodes can be configured in the same way. With this scheme, the central server would hold all the data coming from all the replication clients, and each intermediate server would hold only the subset of data corresponding to the client groups it serves.

This allows a system of isolated branch offices (delegations) with no risk of mixing data between them. The central server collects all the data (e.g. orders, invoices, etc.) produced in each branch.

If the clients are configured without selectivity, each node would hold a copy equivalent to the central database; this is the opposite case: a central database distributed among all the servers.

FILE REPLICA

This module is not implemented in this version.

CLIENT ROAMING

There are still conceptual issues that have not been solved at the design level.

For now, the structure is: clients fixed to their nodes, and data propagated among servers.

Clients cannot change servers dynamically.